Download QCG-OMPI (approx. 11MB).

QCG-OMPI is a grid-oriented MPI implementation based on OpenMPI 1.3a1. An extended run-time environment provides the features needed to execute MPI applications on grids (clusters of clusters, or federations of clusters), and specific instructions allow efficient programming on such machines.

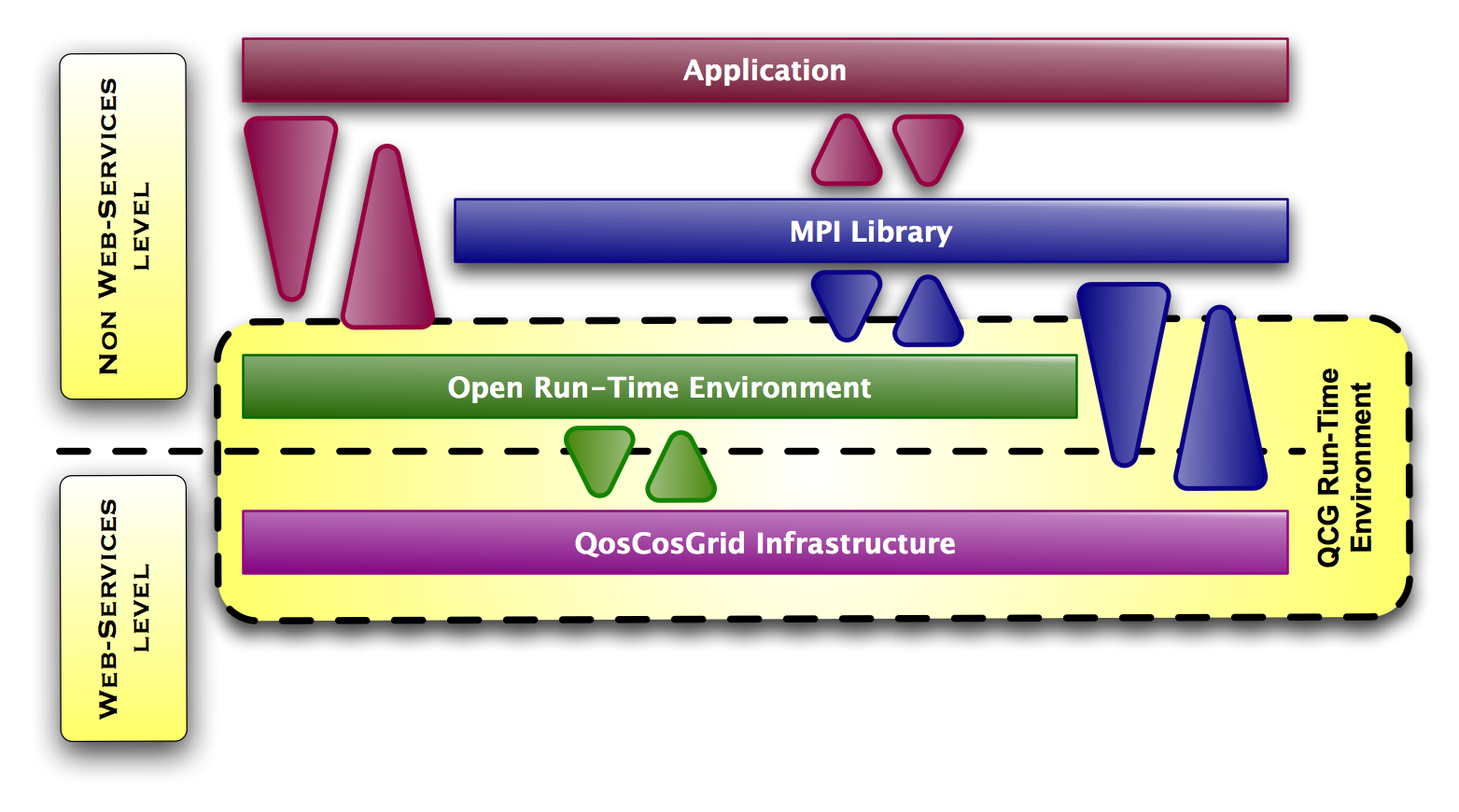

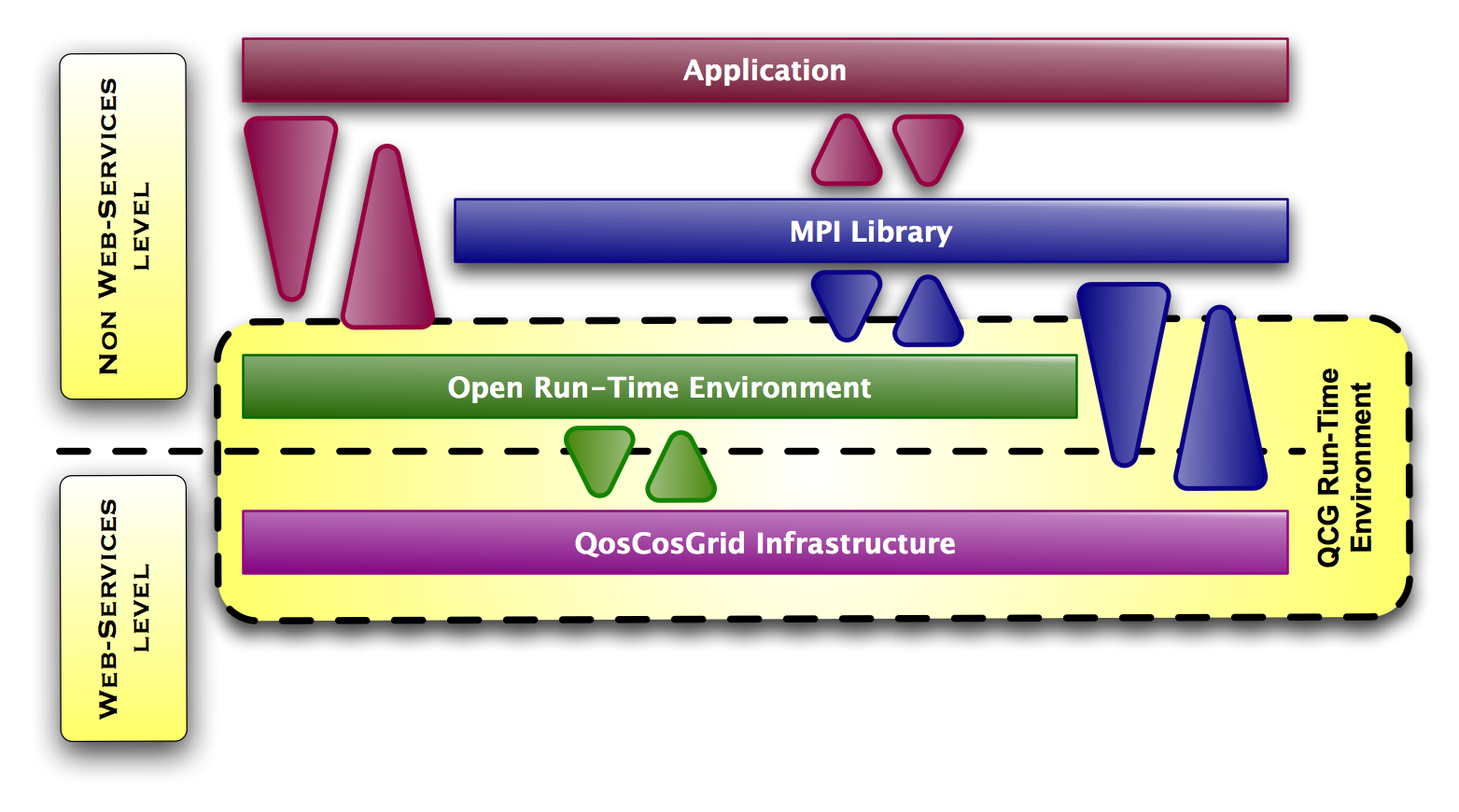

OpenMPI's run-time environment is extended by a set of light-weight grid services, which are part of the QosCosGrid infrastructure.

The grid-specific features of QCG-OMPI are the following:

- Firewall and NAT bypassing

- Support for heterogeneous resources

- Run-time topology discovery

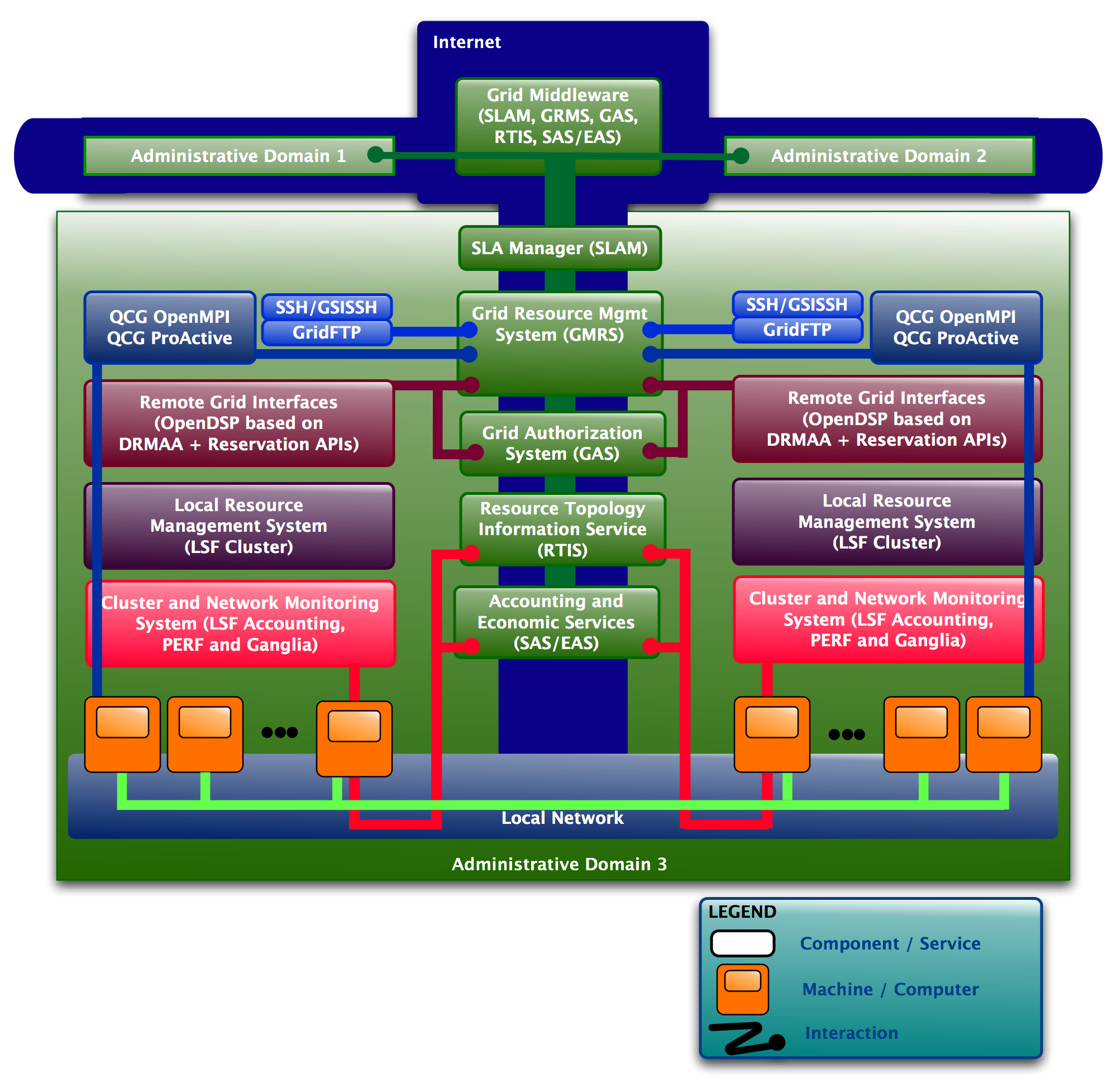

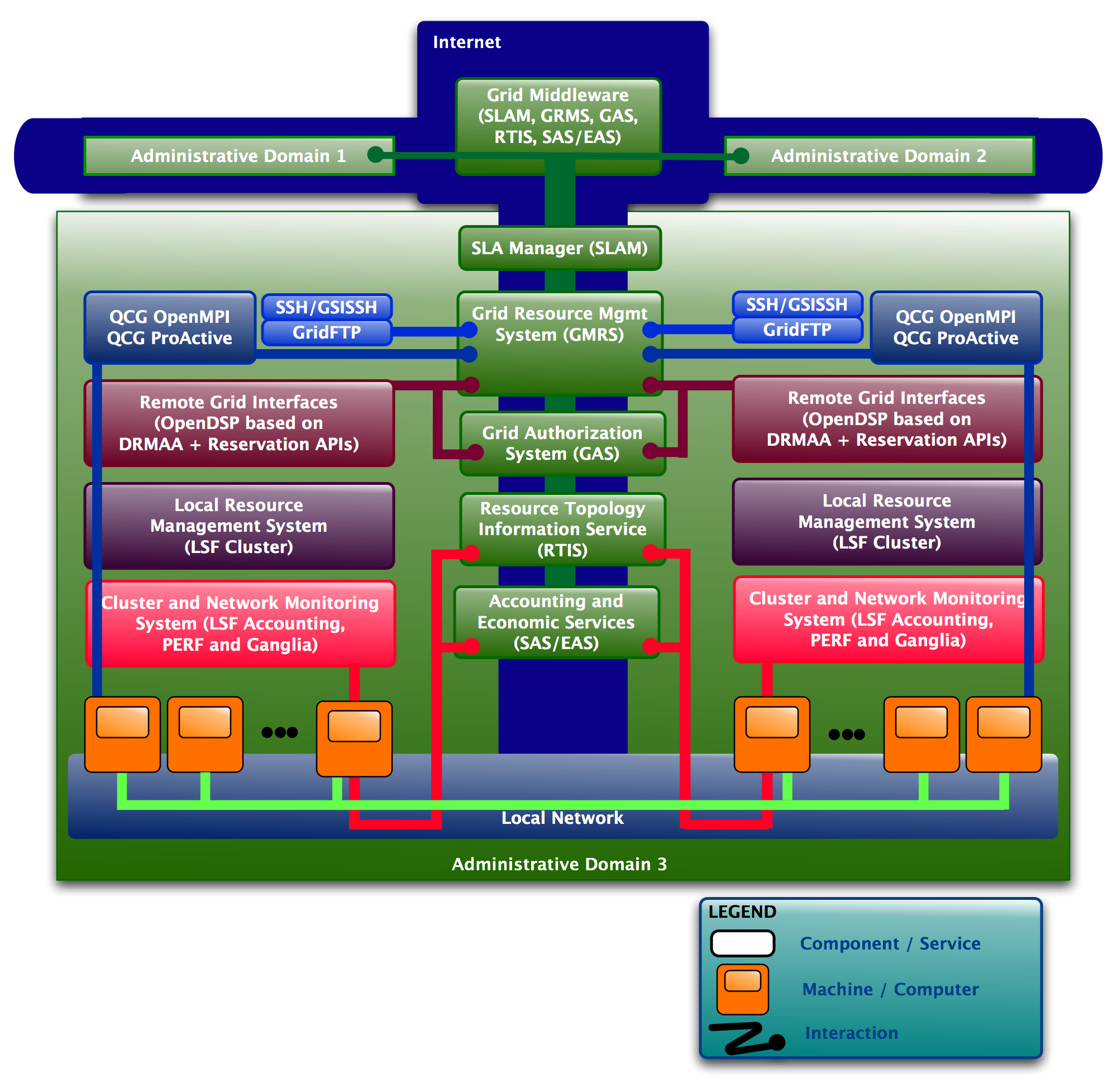

The QosCosGrid organization is based on the concept of administrative domains. An administrative domain may be a partner. Typically, an administrative domain is located behind a firewall, may use private addresses with Network Address Translation and Port Address Translation, and applies local system administration policies. A set of services runs in each administrative domain: from the bottom to the top of the software stack, a monitoring system, a resource management system, a DRMAA interface, and an environment for parallel applications.

A set of higher-level services is used to coordinate resources. For instance, a global accounting service keeps track of each user's platform usage, and a global resource management system coordinates grid-level reservations.

QCG-OMPI targets institutional grids: several partners share their local resources (clusters) with each other, and can then use a larger-scale platform than what they can access locally. Clusters are interconnected through a public network, the Internet, and must therefore be protected by firewalls. On the other hand, an application that spans the grid needs its processes to be able to communicate with each other. Distributed computing on grids thus faces two orthogonal problems: connectivity (within applications) and security (against the outside world).

The usual solution consists of opening a given range of ports in the firewall and configuring the MPI library to keep its connections within this range. This approach is used by MPICH-G2, a grid-oriented version of MPICH that relies on the Globus Toolkit. I also wrote a patch for OpenMPI, integrated into the 1.3 branch, that makes this port range limitation available. Another solution, used by PACX-MPI and GridMPI, uses local relay daemons and needs no more than one open port on the firewall. Still, it requires an opening in the security of the clusters. Moreover, processes have to share the bandwidth of these relay daemons and go through an extra hop.
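The port range approach can be illustrated with a minimal Python sketch. This is not code from MPICH-G2 or OpenMPI: the function name `bind_in_range` and the port numbers in the usage note are assumptions chosen for illustration. It only shows the idea of a library restricting its listening sockets to the ports that the firewall leaves open.

```python
import socket


def bind_in_range(port_min, port_max, host="127.0.0.1"):
    """Return a listening TCP socket bound to the first free port
    in [port_min, port_max] (hypothetical helper, for illustration)."""
    for port in range(port_min, port_max + 1):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        try:
            sock.bind((host, port))
        except OSError:
            # Port already in use (or not permitted): try the next one.
            sock.close()
            continue
        sock.listen()
        return sock
    raise OSError(f"no free port in range {port_min}-{port_max}")
```

For example, `bind_in_range(45000, 45050)` would return a socket listening on some port between 45000 and 45050, provided at least one of them is free. The drawback described above remains: every port in the range has to be opened in the firewall.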

QCG-OMPI features a set of techniques to establish inter-cluster connections in spite of fully closed firewalls. These techniques were initially developed in PVC and were adapted to the architecture of QCG-OMPI. They include:

- Direct connection: if no firewall is placed between two processes, they communicate directly with each other.

- Port range technique: if a range of ports is open in the firewall, communications use these ports.

- Reverse connection: if some processes are not located behind any firewall, connections are reversed so that the other processes connect toward those processes.

- Traversing TCP: this advanced technique uses the TTCP protocol, developed for PVC, which consists of re-injecting the TCP SYN packet, initially dropped by the firewall, into the server's TCP stack. This technique allows direct connections to be established in spite of firewalls.

- TCP hole punching: two simultaneous connections from both sides of the firewall create a hole and establish the connection.

- Proxy method: when none of the aforementioned techniques can be used, communications use a relay daemon.
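One way to read the list above is as a fallback chain, ending with the proxy. The Python sketch below encodes that reading. It is an illustration under stated assumptions, not QCG-OMPI's actual selection logic: the `Endpoint` fields are hypothetical descriptors, and treating the listing order as a priority order is an assumption as well.

```python
from dataclasses import dataclass
from enum import Enum


class Technique(Enum):
    DIRECT = "direct connection"
    PORT_RANGE = "port range"
    REVERSE = "reverse connection"
    TRAVERSING_TCP = "traversing TCP"
    HOLE_PUNCHING = "TCP hole punching"
    PROXY = "proxy"


@dataclass
class Endpoint:
    # Hypothetical description of one process's network situation.
    firewalled: bool                # inbound connections are blocked
    open_port_range: bool = False   # the firewall leaves a port range open
    ttcp: bool = False              # Traversing TCP is available
    hole_punching: bool = False     # simultaneous connections get through


def choose_technique(a: Endpoint, b: Endpoint) -> Technique:
    """Pick a technique for connecting a and b, in the listing order."""
    if not a.firewalled and not b.firewalled:
        return Technique.DIRECT
    if (a.firewalled and a.open_port_range) or (b.firewalled and b.open_port_range):
        return Technique.PORT_RANGE
    if a.firewalled != b.firewalled:
        # Exactly one side is reachable: the firewalled side initiates.
        return Technique.REVERSE
    if a.ttcp and b.ttcp:
        return Technique.TRAVERSING_TCP
    if a.hole_punching and b.hole_punching:
        return Technique.HOLE_PUNCHING
    return Technique.PROXY
```

With these assumptions, two firewalled endpoints with no other capability fall through every test and end up on the proxy, which matches the role of the relay daemon as a last resort.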

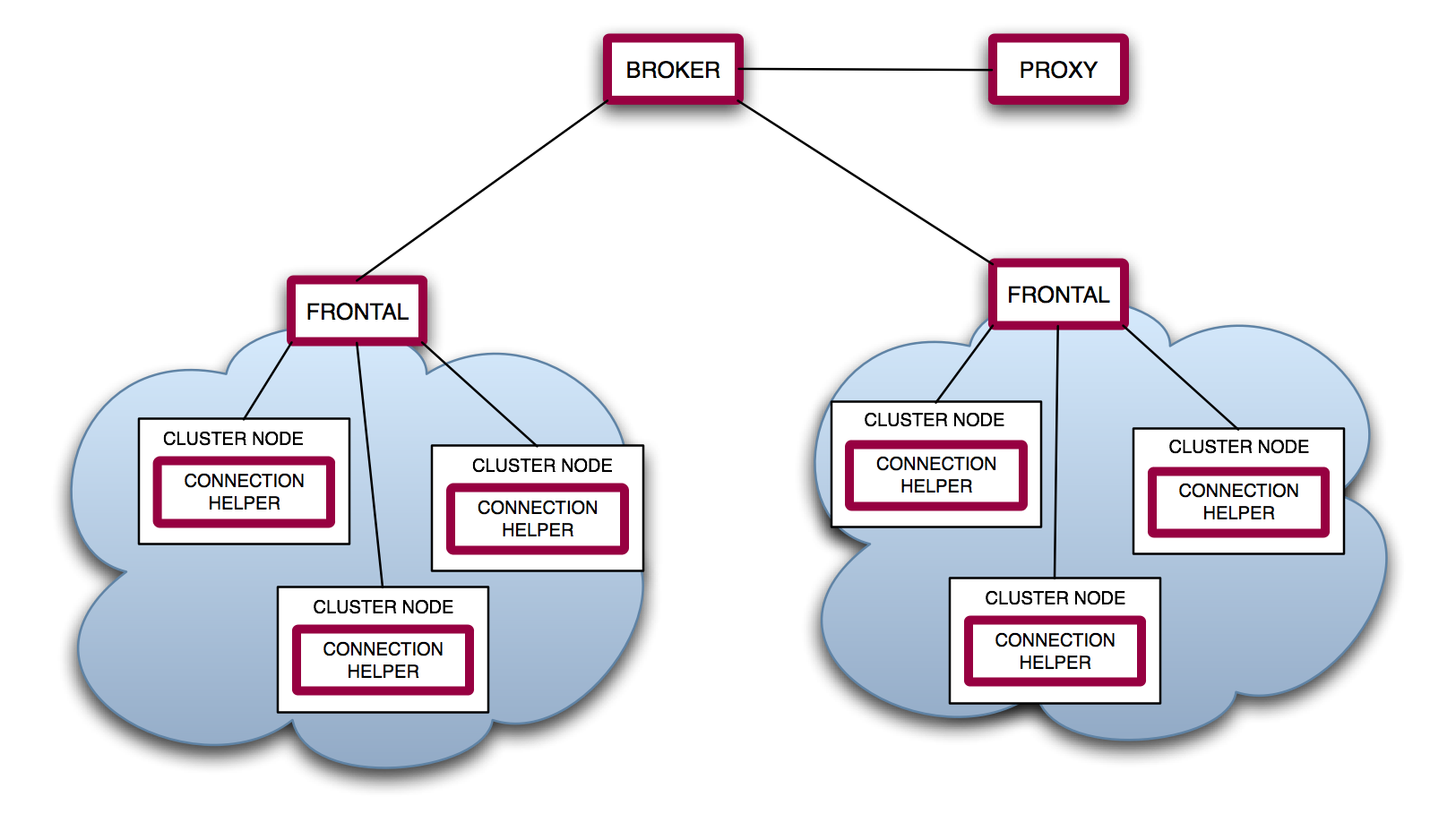

A distributed infrastructure provides these advanced connectivity features. It is made of a set of distributed services located throughout the grid, and forms the grid-level part of the run-time environment of QCG-OMPI.

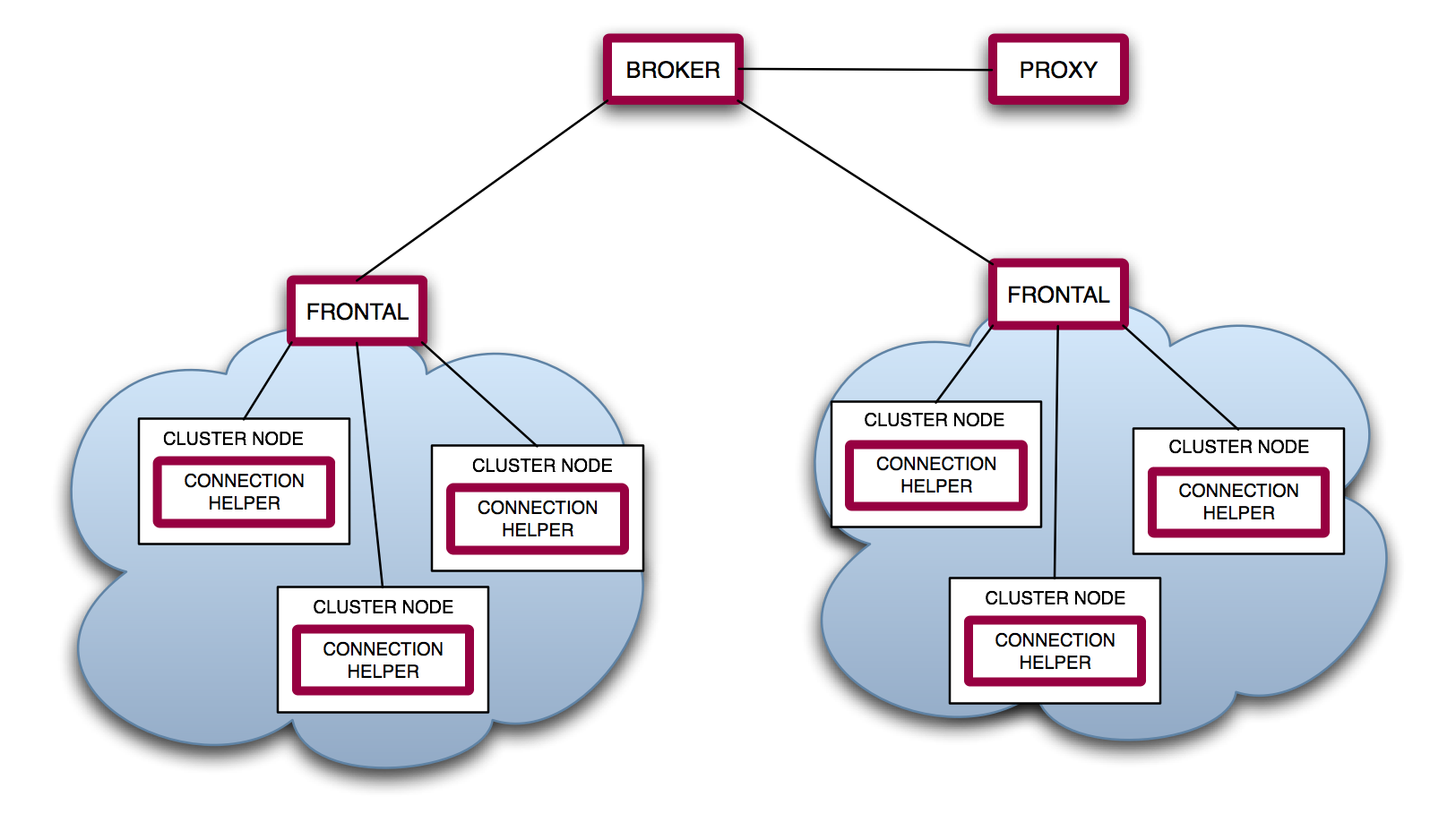

A connection helper runs on each machine of the grid. As its name indicates, it helps local processes establish connections with remote processes.

A front-end component, the frontal, runs on each cluster. Connection helpers contact their local frontal to obtain remote processes' contact information.

The broker, the highest-level component, is invoked when frontals cannot answer a request. When it answers a request, the frontal that issued the request stores this information and acts as a cache, so that it can answer further requests from its cluster more quickly and without any call to the broker.
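The frontal's caching behaviour can be sketched in a few lines of Python. The class names mirror the components described above, but the methods, the `requests` counter, and the shape of the contact information (a host and port pair) are assumptions made for this illustration, not the actual QCG-OMPI interfaces.

```python
class Broker:
    """Grid-level directory of contact information (hypothetical API)."""

    def __init__(self, directory):
        self._directory = dict(directory)
        self.requests = 0  # number of lookups the broker had to serve

    def lookup(self, process_id):
        self.requests += 1
        return self._directory[process_id]


class Frontal:
    """Per-cluster front end that caches the broker's answers."""

    def __init__(self, local_processes, broker):
        self._known = dict(local_processes)
        self._broker = broker

    def lookup(self, process_id):
        if process_id not in self._known:
            # Unknown locally: ask the broker once, then remember the answer.
            self._known[process_id] = self._broker.lookup(process_id)
        return self._known[process_id]
```

In this sketch, two successive calls to `Frontal.lookup` for the same remote process trigger a single request to the broker; the second call is answered from the frontal's own table.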

The proxy is a relay daemon used when no direct connection is possible.

This infrastructure is deployed by a grid administrator. It does not need any specific privilege, and services can be executed by any user. The deployment process generates cluster-specific parameter files (MCA parameters) used by mpiexec to access and use the web-service level of the run-time environment.